If you work for a reasonable amount of time, the odds are high that you will work for some bad managers. I have certainly had what I consider more than my fair share of bad managers (and a couple of absolutely terrible ones). While I have felt anger, frustration, sadness and rage about them at times, I am also strangely grateful for the experience, which taught me a lot about what not to do. I also readily acknowledge that I may not have avoided those pitfalls as a manager myself.

What is a manager’s primary role? At the simplest level, it’s about providing clear direction to the team working under their supervision. In my experience, the one consistent thing I have noticed among bad managers is that they lacked clarity about where to head. How they compensated for that lack of clarity was usually what made me think of them as bad managers.

Imagine you are quite a good driver trying to drive from Phoenix to LA to visit Disney, but you don’t know where LA is relative to Phoenix or when you need to get there. Since you are a good driver, you drive defensively and at the optimum speed to maximize your car’s mileage. You refuse to ask for directions even after your passengers repeatedly beg you to. What are the odds that you reach LA? Would anyone enjoy being in that car? And after that LA adventure, would they ever trust you to drive them across the street to a McDonald’s?

Bad managers waste everyone’s time and build frustration because they try to optimize the wrong things and generally don’t accomplish the right results.

From an employee’s perspective, which characteristic most makes them think of their boss as a bad manager?

I would think “micromanagement” wins that prize in a landslide.

In my experience, there are two reasons managers tend to micromanage for extended periods of time:

- They don’t have a great vision, so the only thing they can manage is the process, which they have mastered. This is also a major reason why great sellers and engineers don’t always become great sales and engineering managers.

- They don’t have the skills to recruit, coach and manage people for the job at hand, so they compensate with their own time and expertise. The day has only 24 hours, and they simply run out the clock without much to show for the effort.

Most employees will, at least in the initial phase of working for a bad manager, ask questions and make suggestions. Unfortunately, bad managers are typically poor communicators. Some give answers that are vague and confusing, some only talk at you rather than with you, and some either don’t talk at all or open their mouths only for negative feedback. By wasting the opportunity to course correct or gain clarity, they make a bad situation worse.

What’s the long term impact of bad managers?

If I could choose one word, I would say toxicity!

They tend to take credit and deflect blame. Over time, I have come to think this happens less out of malice and more out of ignorance and a lack of self-awareness. It doesn’t matter why, though: the effect on team morale is the same.

Being a manager is hard – it’s difficult to balance empathy with business needs. But that’s the job – you can’t just shrug it off.

Unfortunately, toxicity sometimes gets rewarded when business results are great. That happens more often than it should, especially in larger organizations. This is quite simply a leadership failure.

What can we do about this?

Employees with bad managers: First, evaluate whether you are jumping the gun and simply blaming the manager. I have done it myself and only realized it later, and I have seen it hundreds of times as an upline manager. A network of mentors helps a lot with getting an objective understanding, and in many cases it will also help mitigate the situation. Since power is asymmetrical, if your concerns are not addressed, improve your skills and your network with urgency and get the heck away from the toxic manager as quickly as you can. This is also why I am a big fan of constantly improving optionality in life: it helps us face adversity with minimum trouble.

Managers themselves: Learn to listen with the intent to understand, not just to respond. Ask yourself the hard WHY questions. None of us is truly objective about ourselves, so get 360-degree feedback to the extent you can. Maybe you are already a good manager; the feedback will make you a great one. There is really no downside to listening, understanding and tweaking what you do.

One of the things that has made me a better manager is a continuous interest in learning more and more about my own manager’s job. That helps me understand why my boss wants me to do certain things, which in turn helps me give clear direction to my team.

One last thing: becoming a manager is usually motivated by the idea of making more money than you did before. There is no shame in that at all. But if money is all you need and you hate being a manager, try talking to your upline managers about whether you can be a highly paid individual contributor. Failing that, look outside the company for such options. Life is too short to be miserable doing things you don’t like every day. As an upline manager, I usually ask a lot of questions when people ask me for promotions and career development advice. In my experience, 90% of the time it’s just a proxy for making more money, and there are many ways to make that money if you have honest conversations with your own managers. You will do yourself and your team a big favor.

Upline managers: You really are the biggest culprit if your chain of command has a lot of bad managers. Compared to employees and their bad managers, you have the best chance of being objective. You need to constantly listen, proactively find out how the culture of your team is evolving, and make tweaks. If all you do is watch short-term business results, you will often not realize the long-term damage your inaction causes. Assume that the aggregated information you see probably hides many real issues, and unless you actively probe, you won’t see what needs to be fixed.

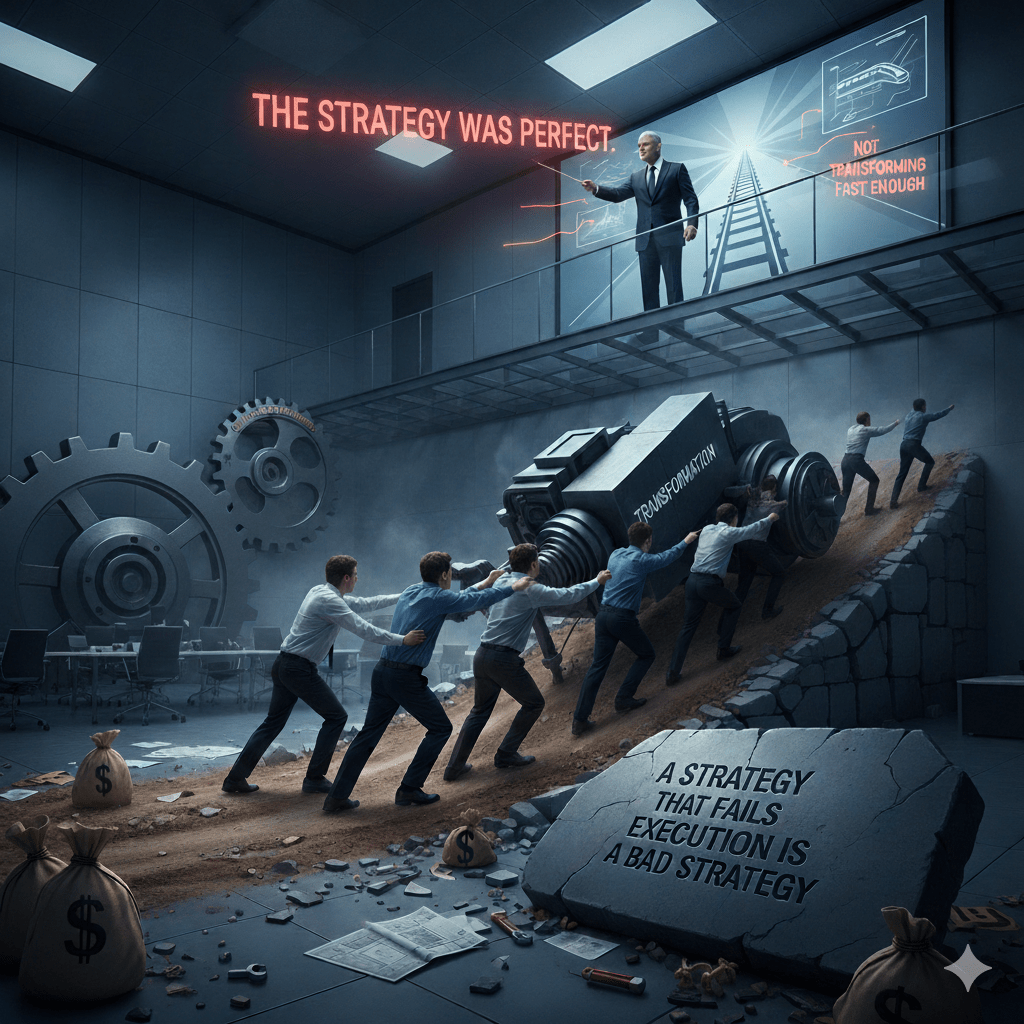

I don’t like it when senior leaders say things like “the strategy was perfect and it was just an execution failure”. My strong belief on this matter is that a strategy that fails in execution is a bad strategy: it simply did not account for the constraints appropriately. How many times have we heard top leaders say “we are not transforming fast enough” when they have not made the right changes and investments down the line to enable that transformation? I often remind folks, “The big boss is called the Chief Executive Officer for a reason, and the Chief Strategy Officer works for the CEO, not the other way around.” Don’t get me wrong: you do need a strategy, but it needs to be grounded in reality!